About Me

I am an AI researcher. Prior to this, I was a postdoctoral researcher at Imperial College London. I received my PhD from the University of Manchester and previously worked as a research intern at Microsoft Research. My research focuses on generative modelling, with a recent emphasis on foundation models and diffusion modelling, particularly for healthcare applications. My work has been published in top conferences such as NeurIPS, ICML, KDD, ACL, EMNLP, and NAACL, and has collectively received over a hundred citations.

News

- (First Author) Our paper MIRA: Medical Time Series Foundation Model for Real-World Health Data has been accepted to NeurIPS 2025!

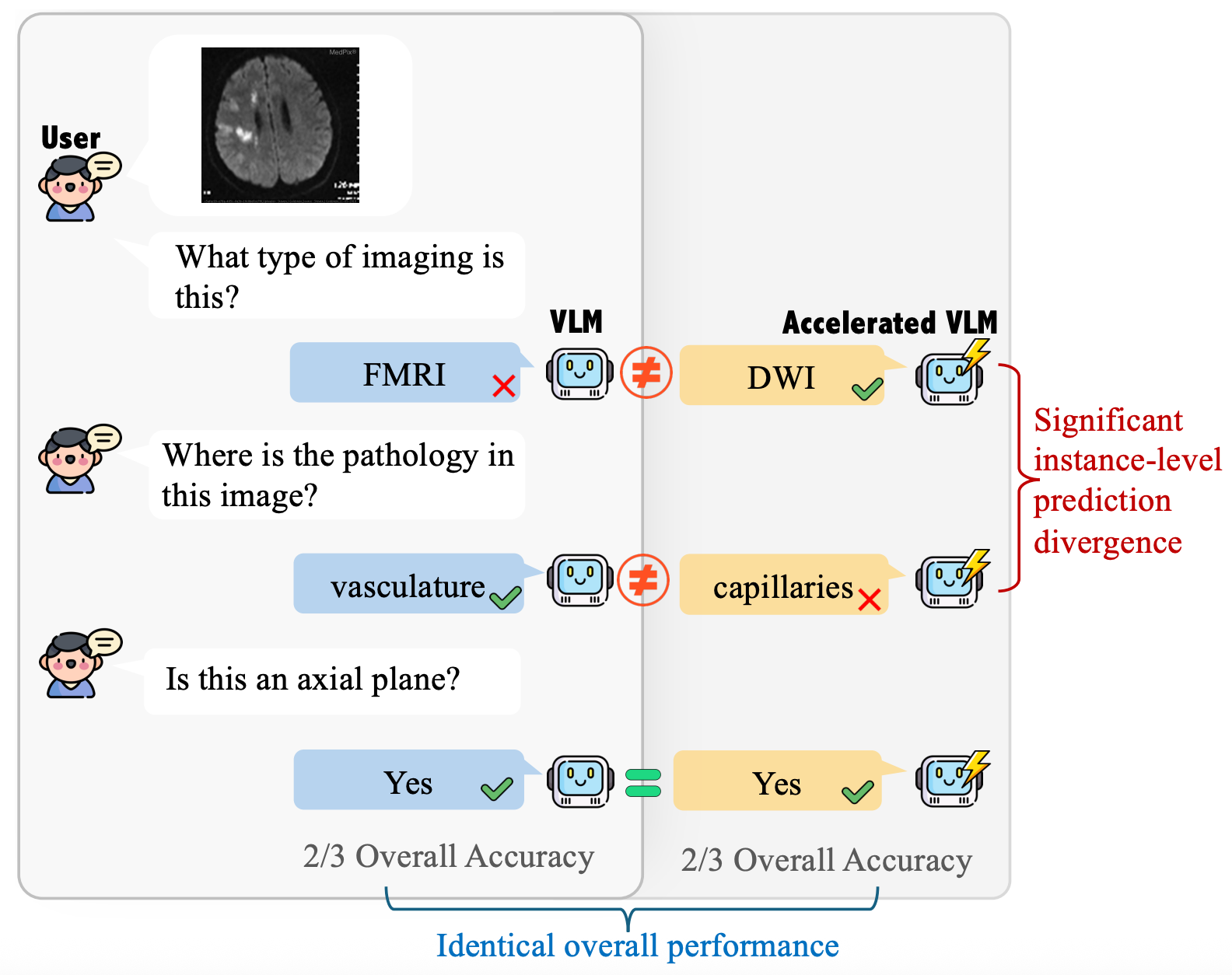

- Our paper Does Acceleration Cause Hidden Instability in Vision Language Models? Uncovering Instance-Level Divergence Through a Large-Scale Empirical Study has been accepted to EMNLP 2025!

- Our paper TarDiff: Target-Oriented Diffusion Guidance for Synthetic Electronic Health Record Time Series Generation has been accepted to KDD 2025!

- (First Author) Our paper BRIDGE: Bootstrapping Text to Control Time-Series Generation via Multi-Agent Iterative Optimization and Diffusion Modelling has been accepted to ICML 2025!

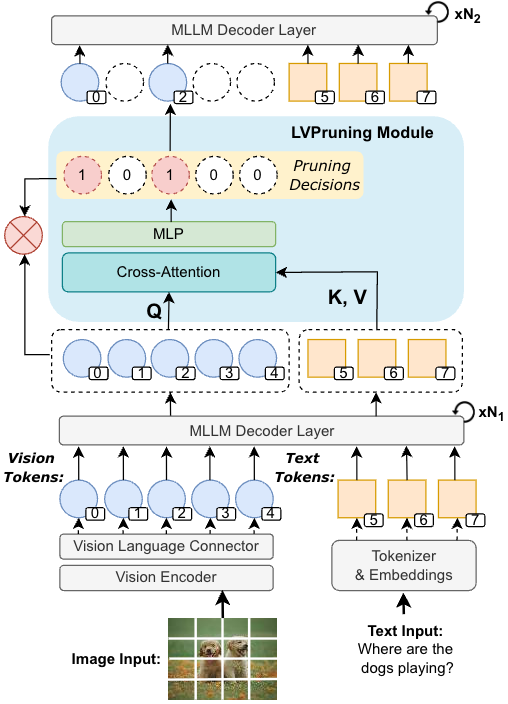

- Our paper LVPruning: An Effective yet Simple Language-Guided Vision Token Pruning Approach for Multi-modal Large Language Models has been accepted to NAACL 2025!

Research Statement

Foundation Models

My core research focuses on developing foundation models that generalize across tasks, modalities, and domains. I explore modular architectures, such as sparse experts, adaptive attention, and continuous-time representations, that can support forecasting, reasoning, and generation across diverse data types, including time series, images, and text.

Generative Modelling

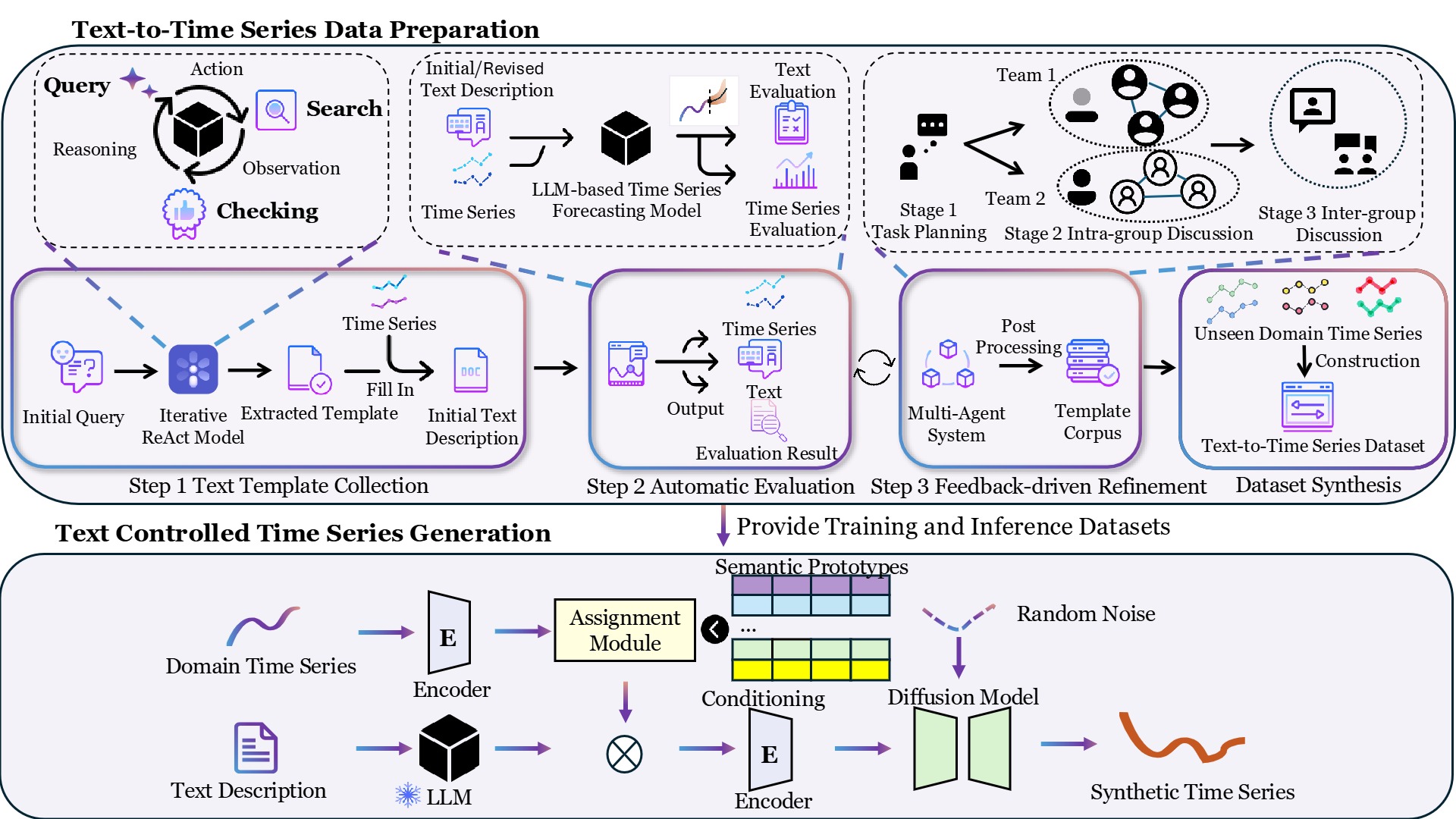

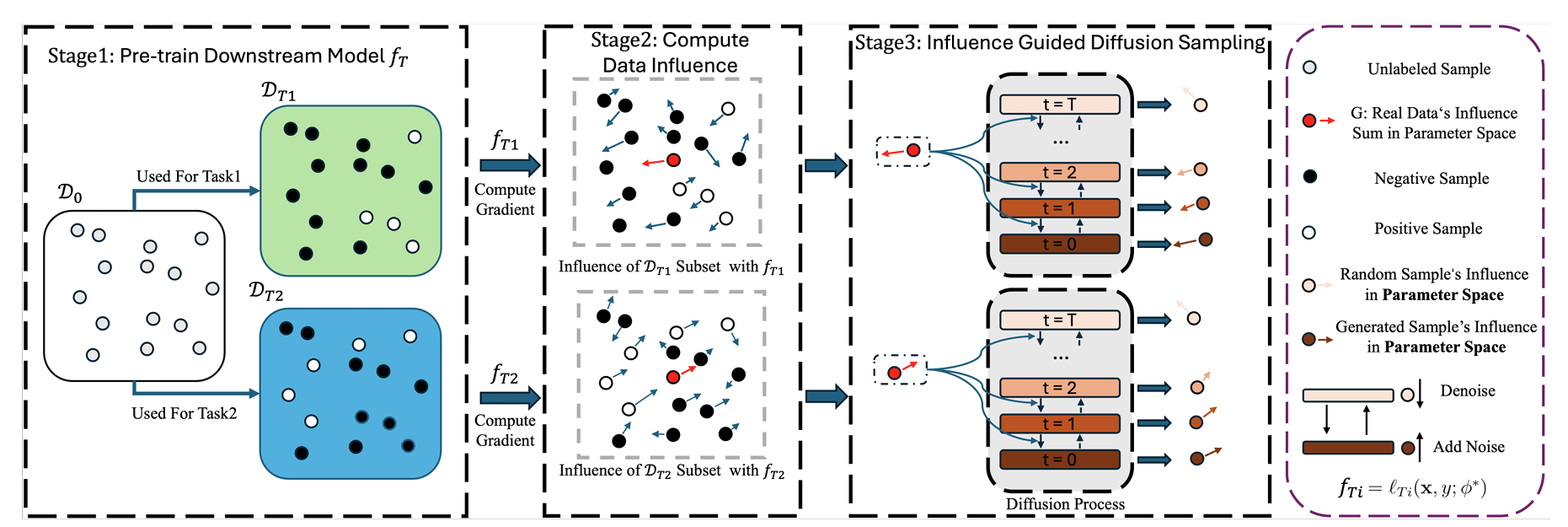

I design generative systems that synthesize structured data such as multivariate time series or clinical signals from natural language. My work includes diffusion-based models that allow fine-grained control over the output, enabling precise and faithful generation for simulation, augmentation, and decision support.

Health AI

I work on building trustworthy AI systems for healthcare, with a particular focus on modeling real-world clinical data such as EHRs, physiological signals (e.g., ECG), and longitudinal records. My research aims to support clinical reasoning, outcome prediction, and health system optimization.

LLM Evaluation & Attribution

I investigate how large language models perform across tasks, domains, and inputs, with a focus on robustness and attribution. My work includes instance-level analysis of LLM behavior, tracing dataset influence on model predictions, and identifying failure modes in biomedical or instruction-following settings.

Cross-modal Representation Learning

I study how representations can be aligned across modalities—such as language, vision, and structured signals—to enable joint reasoning and transfer. My work explores cross-modal distillation, token pruning, and contrastive alignment for both general-purpose LLMs and medical multimodal applications.

Research Works

Publications

Topics: Foundation Model / Generative Modelling / Health AI / LLM Evaluation & Attribution / Cross-modal Representation Learning / NLP & Argument Mining (* indicates equal contribution)

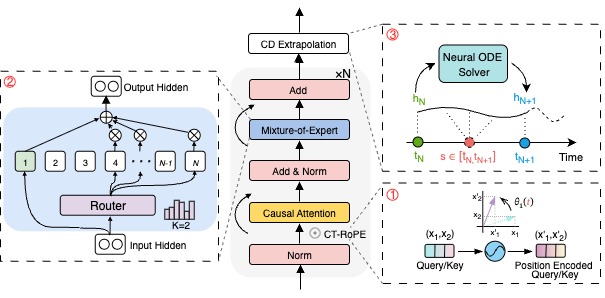

MIRA: Medical Time Series Foundation Model for Real-World Health Data

Hao Li, Bowen Deng, Chang Xu, Zhiyuan Feng, Viktor Schlegel, Yu-Hao Huang, Yizheng Sun, Jingyuan Sun, Kailai Yang, Yiyao Yu, Jiang Bian

BRIDGE: Bootstrapping Text to Control Time-Series Generation via Multi-Agent Iterative Optimization and Diffusion Modelling

Hao Li, Yu-Hao Huang, Chang Xu, Viktor Schlegel, Renhe Jiang, Riza Batista-Navarro, Goran Nenadic, Jiang Bian

TarDiff: Target-Oriented Diffusion Guidance for Synthetic Electronic Health Record Time Series Generation

Bowen Deng, Chang Xu, Hao Li, Yu-Hao Huang, Min Hou, Jiang Bian

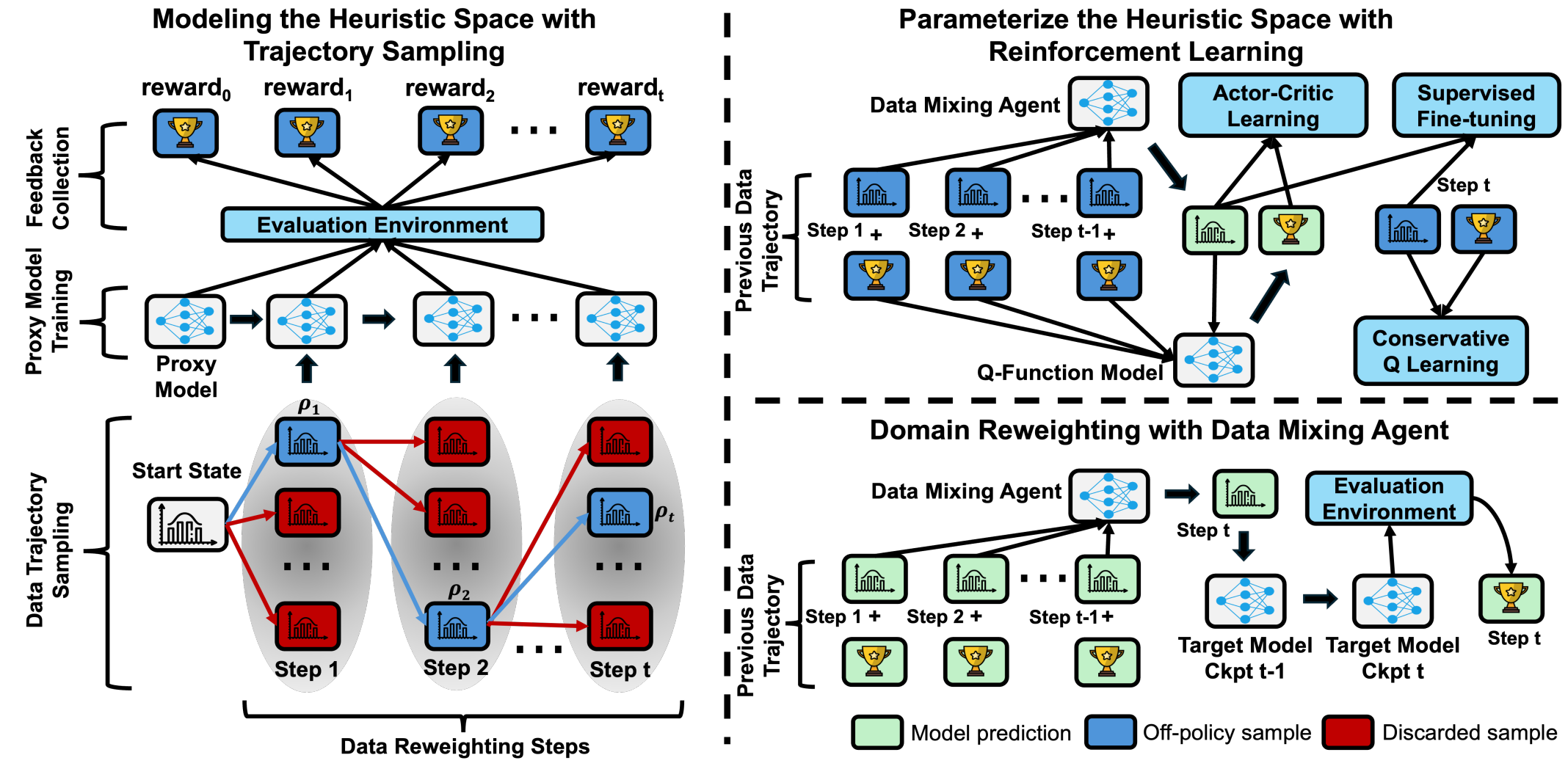

Data Mixing Agent: Learning to Re-weight Domains for Continual Pre-training

Kailai Yang, Xiao Liu, Lei Ji, Hao Li, Yeyun Gong, Peng Cheng, Mao Yang

Does Acceleration Cause Hidden Instability in Vision Language Models? Uncovering Instance-Level Divergence Through a Large-Scale Empirical Study

Yizheng Sun, Hao Li, Chang Xu, Hongpeng Zhou, Chenghua Lin, Riza Batista-Navarro, Jingyuan Sun

LVPruning: An Effective yet Simple Language-Guided Vision Token Pruning Approach for Multi-modal Large Language Models

Yizheng Sun, Yanze Xin, Hao Li, Jingyuan Sun, Chenghua Lin, Riza Batista-Navarro

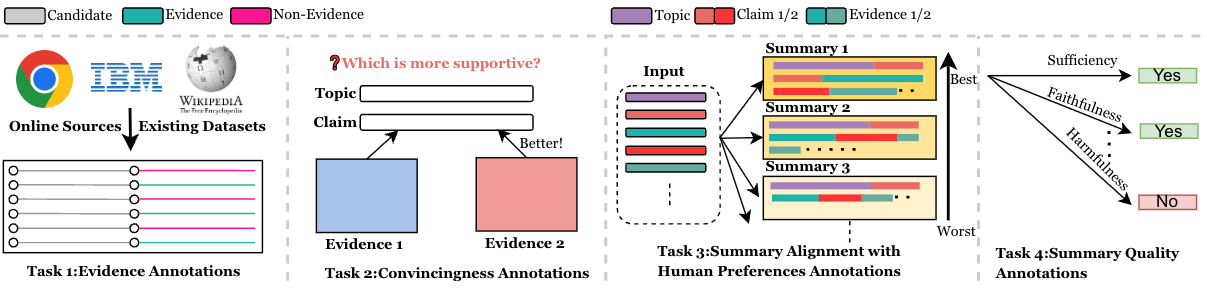

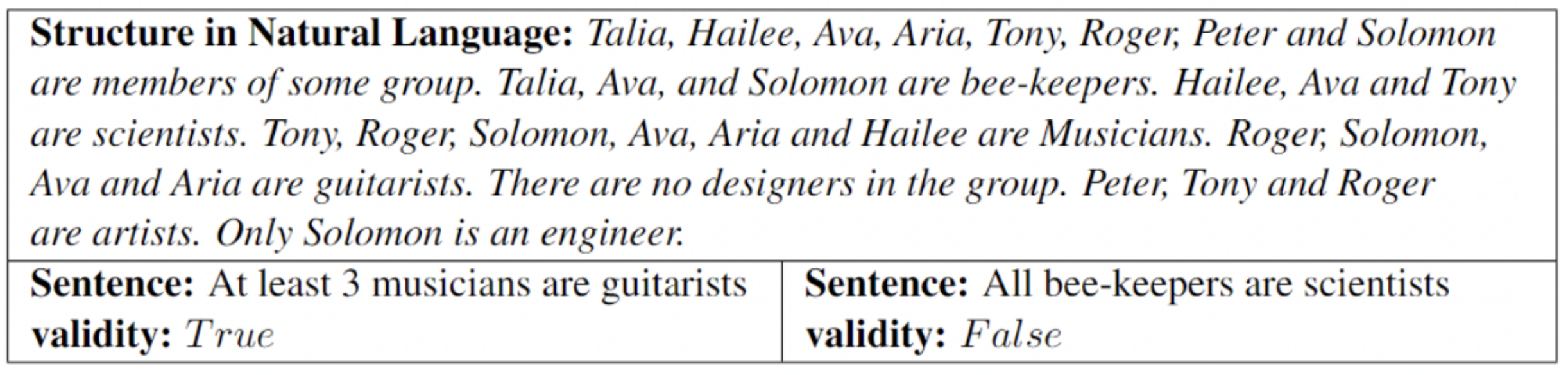

Which Side Are You On? A Multi-task Dataset for End-to-End Argument Summarisation and Evaluation

Hao Li, Yuping Wu, Viktor Schlegel, Riza Batista-Navarro, Tharindu Madusanka, Iqra Zahid, Jiayan Zeng, Xiaochi Wang, Xinran He, Yizhi Li, Goran Nenadic

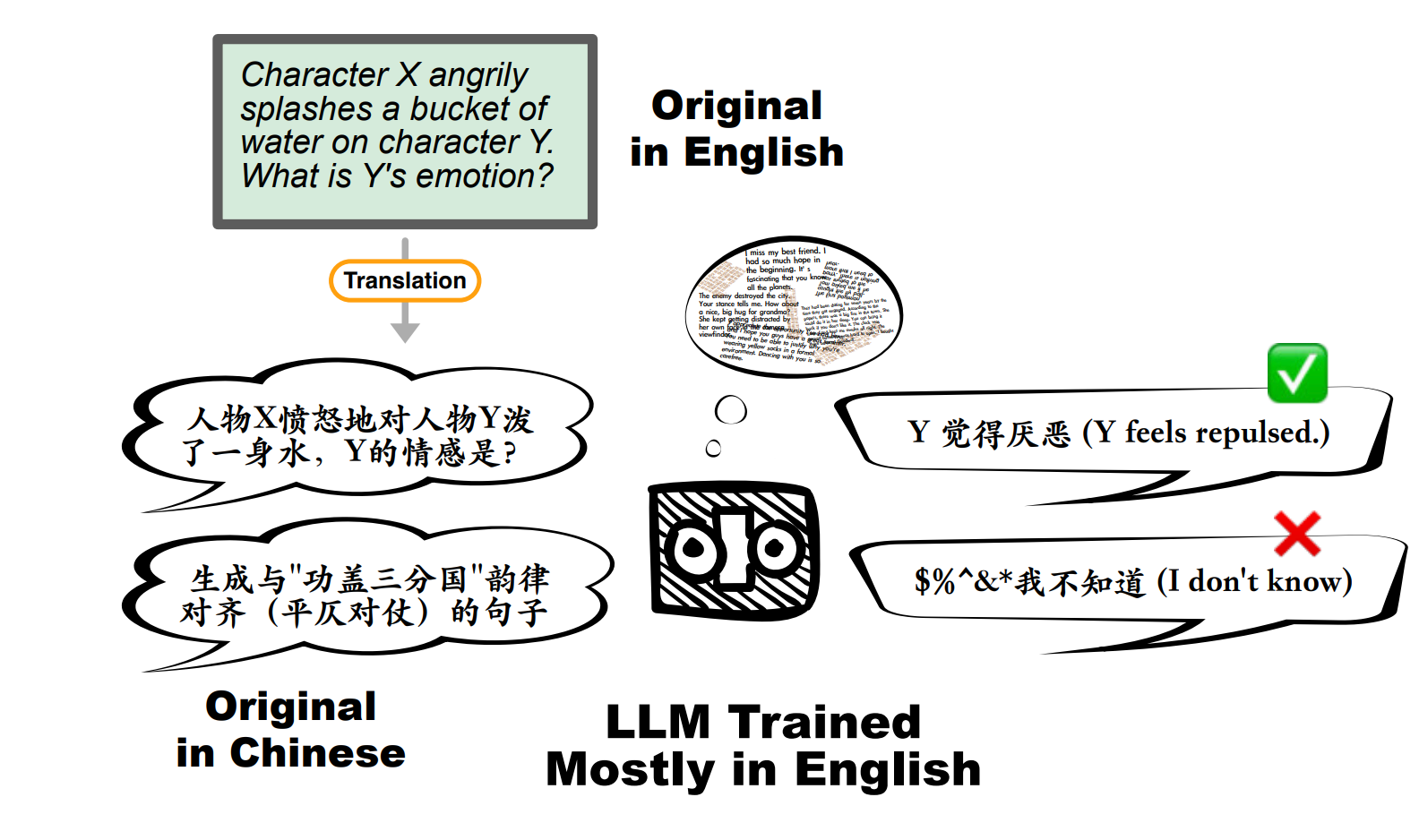

CIF-Bench: A Chinese Instruction-Following Benchmark for Evaluating the Generalizability of Large Language Models

Yizhi Li, Ge Zhang, Xingwei Qu, Jiali Li, Zhaoqun Li, Zekun Wang, Hao Li, Ruibin Yuan, Yinghao Ma, Kai Zhang, Wangchunshu Zhou, Yiming Liang, Lei Zhang, Lei Ma, Jiajun Zhang, Zuowen Li, Stephen W Huang, Chenghua Lin, Wenhu Chen, Jie Fu

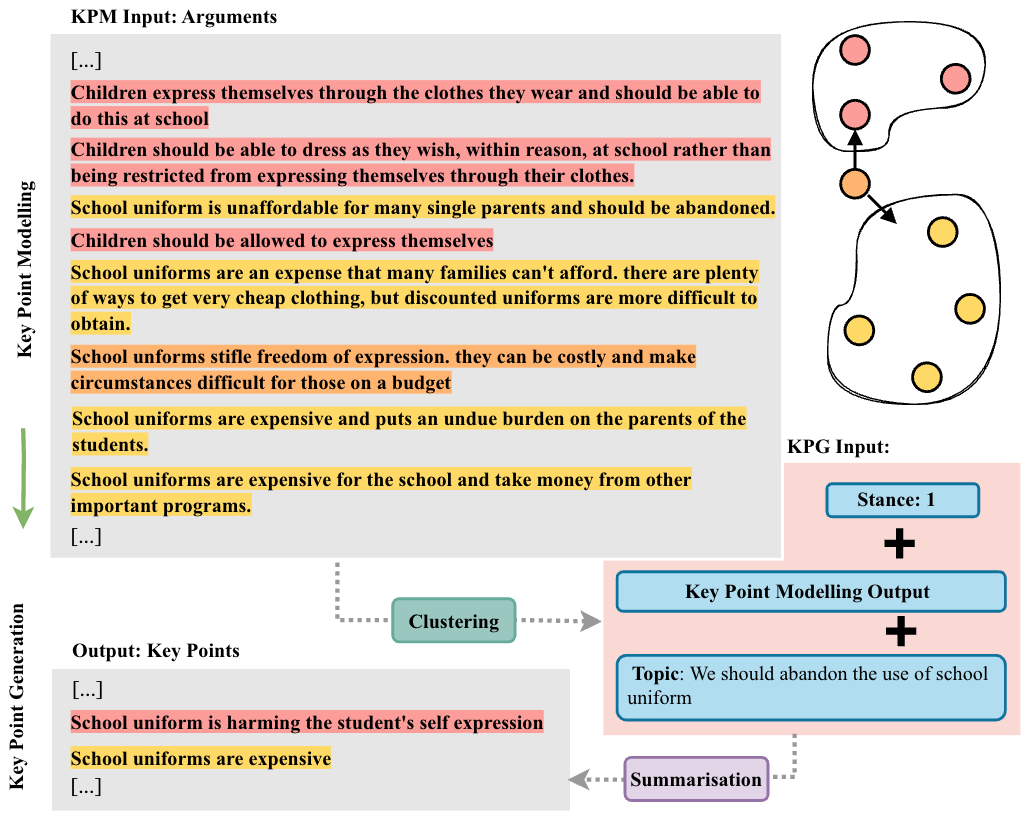

Do You Hear the People Sing? Key Point Analysis via Iterative Clustering and Abstractive Summarisation

Hao Li, Viktor Schlegel, Riza Theresa Batista-Navarro, Goran Nenadic

Not All Quantifiers Are Equal: Probing Transformer-based Language Models’ Understanding of Generalised Quantifiers

Tharindu Madusanka, Iqra Zahid, Hao Li, Ian Pratt-Hartmann, Riza Batista-Navarro
